🚀 Zero Downtime Deployment with Azure App Service

Introduction

With Astro we can generate a static site that is deployed to Azure App Service and flies: top performance, Lighthouse metrics close to 100, and with Content Island we can modify the content in a friendly way.

In addition, we can connect a custom GitHub webhook to automatically deploy every time we push to the main branch.

Within Azure App Service, we can create two different types of applications:

- Static Web App: for static sites, ideal for Astro.

- Web App: for more complex applications, where in addition to serving static files, we can run server-side code (such as a REST API).

In this post we will focus on Web App, where the frontend application and a REST API are packaged in a single Docker container deployed to Azure App Service on Linux.

But there is a problem:

👉 Every time we make a change to the site, the deployment can cause downtime, because Azure App Service has to spin up a new container in the production instance. The site can be down for 30 to 60 seconds, which makes a bad impression on our clients.

So, how do we avoid this outage?

The answer: Azure App Service Slots.

In this post we will start from an automatic deployment workflow with GitHub Actions and see how to implement Slots in Azure to have zero downtime deployment.

⚡ TL;DR

Azure App Service slots allow you to deploy in a staging environment. Once everything is ready (even with warmup if necessary), we do a swap with the production environment. Result: deployment without downtime.

🧨 The problem

We have a webhook that launches a deployment every time the content in Content Island is updated. This webhook runs the following GitHub Actions workflow:

name: Deploy

on:

push:

branches:

- main

env:

IMAGE_NAME: ghcr.io/${{github.repository}}:${{github.run_number}}-${{github.run_attempt}}

permissions:

contents: "read"

packages: "write"

id-token: "write"

jobs:

cd:

runs-on: ubuntu-latest

environment:

name: Production

url: https://my-app.com

steps:

- name: Checkout repository

uses: actions/checkout@v4

- name: Log in to GitHub container registry

uses: docker/login-action@v3

with:

registry: ghcr.io

username: ${{ github.actor }}

password: ${{ secrets.GITHUB_TOKEN }}

- name: Build and push docker image

run: |

docker build -t ${{ env.IMAGE_NAME }} .

docker push ${{env.IMAGE_NAME}}

- name: Login Azure

uses: azure/login@v2

env:

AZURE_LOGIN_PRE_CLEANUP: true

AZURE_LOGIN_POST_CLEANUP: true

AZURE_CORE_OUTPUT: none

with:

client-id: ${{ secrets.AZURE_CLIENT_ID }}

tenant-id: ${{ secrets.AZURE_TENANT_ID }}

subscription-id: ${{ secrets.AZURE_SUBSCRIPTION_ID }}

- name: Deploy to Azure

uses: azure/webapps-deploy@v3

with:

app-name: ${{ secrets.AZURE_APP_NAME }}

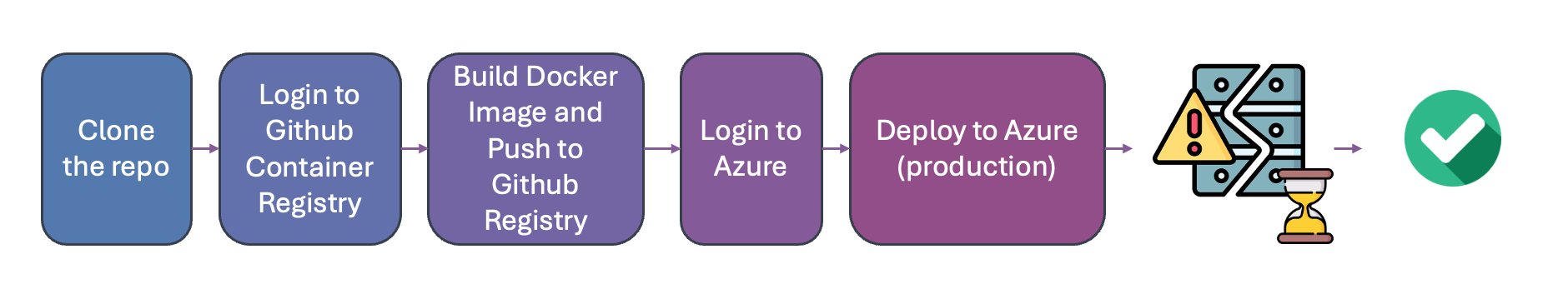

images: ${{env.IMAGE_NAME}}What does this workflow do?

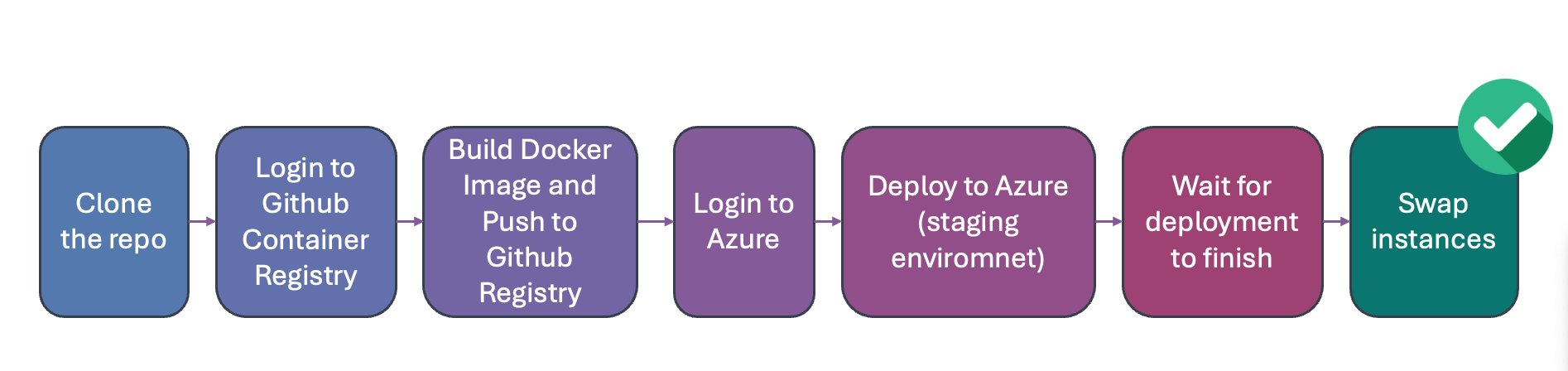

- Clone the repository: `uses: actions/checkout@v4`

- Log in to GitHub Container Registry: `uses: docker/login-action@v3`

- Build and push the Docker image: `docker build ...` and `docker push ...`

- Login Azure: `uses: azure/login@v2`. More info: Azure Login Documentation

- Deploy to Azure App Service: `uses: azure/webapps-deploy@v3`

❌ Where is the problem?

On a single instance without slots, Azure App Service has no built-in way to replace the running container without downtime.

When you make a new deployment:

- The previous container is stopped.

- The new image is downloaded from the registry we are pointing to (in this case, GitHub Container Registry).

- A new container with the new image is started.

- And during that process… the site is down for a few seconds or even minutes.

This translates into a bad user experience.
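To see this outage for yourself, a minimal sketch like the following can poll the site while a deployment runs and count the probes that did not come back with a 200. The function names (`probe`, `count_failures`) and the `PROBE_CMD` override are assumptions of this example, not part of any Azure tooling.

```shell
#!/usr/bin/env bash

# Print the HTTP status code for a URL.
# curl prints 000 when no HTTP response arrives (for example, connection refused).
probe() {
  curl --silent --output /dev/null --max-time 5 --write-out '%{http_code}' "$1" || true
}

# Probe a URL a fixed number of times and print how many probes were not 200.
# PROBE_CMD (hypothetical) lets you replace the probe, e.g. to try the loop offline.
count_failures() {
  local url="$1" attempts="$2" interval="${3:-1}"
  local failures=0 code i
  for ((i = 1; i <= attempts; i++)); do
    code="$("${PROBE_CMD:-probe}" "$url")"
    if [[ "$code" != "200" ]]; then
      failures=$((failures + 1))
    fi
    # Per-probe log goes to stderr so stdout only carries the final count.
    echo "$(date +%T) $code" >&2
    sleep "$interval"
  done
  echo "$failures"
}
```

For example, `count_failures https://my-app.com 120 1` probes roughly once per second for two minutes. Started just before a deployment, the number of non-200 probes gives a rough idea of how long the site was unavailable.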

✅ The solution: Azure App Service Slots

Azure offers a feature called Deployment Slots: additional environments where you can deploy preview versions of your app without affecting production.

🔢 Some facts:

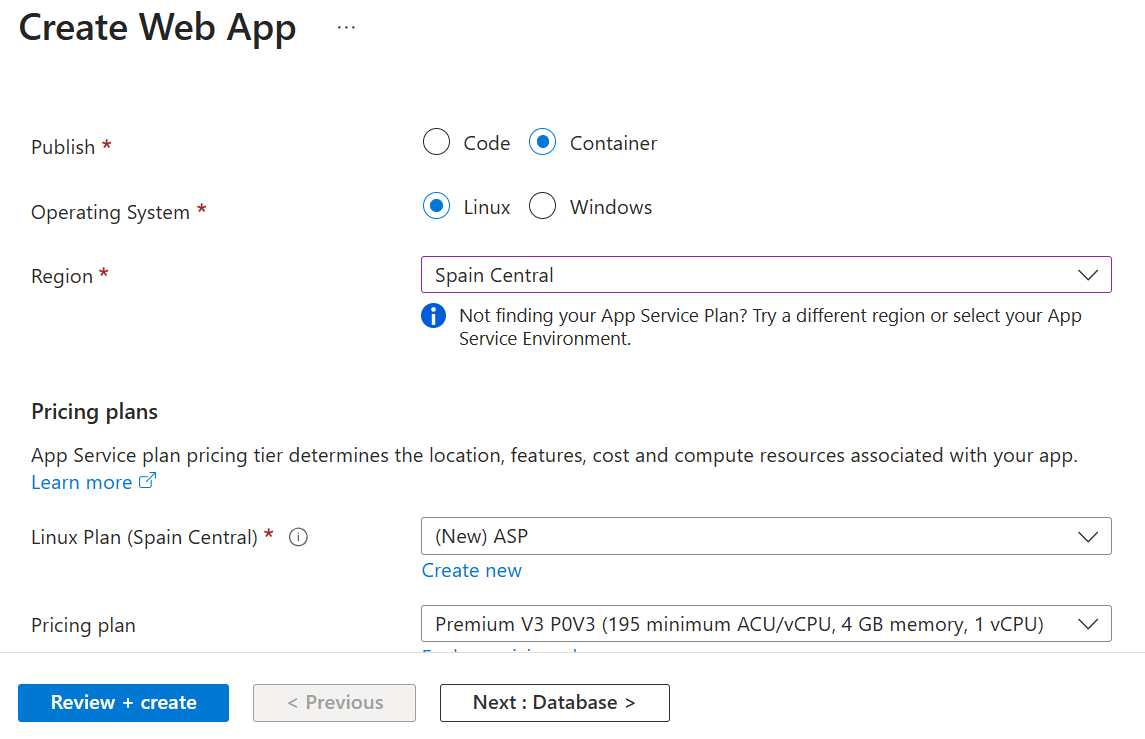

- Available in Standard plans and above (Standard allows up to 5 slots per app). This post uses a Premium plan (approx. from €56/month); see the comparison table.

- You can have up to 20 slots per App Service app in a Premium plan. A plan can also host several apps, each with its own staging slot, as long as the plan's resources allow it.

- Slots are available in both Windows and Linux.

- In this example we will use Linux (more economical, but requires an additional step).

🛠️ How do we do it?

A first approach with a deploy in staging and manual step to production:

- Create an App Service associated with a Premium App Service plan.

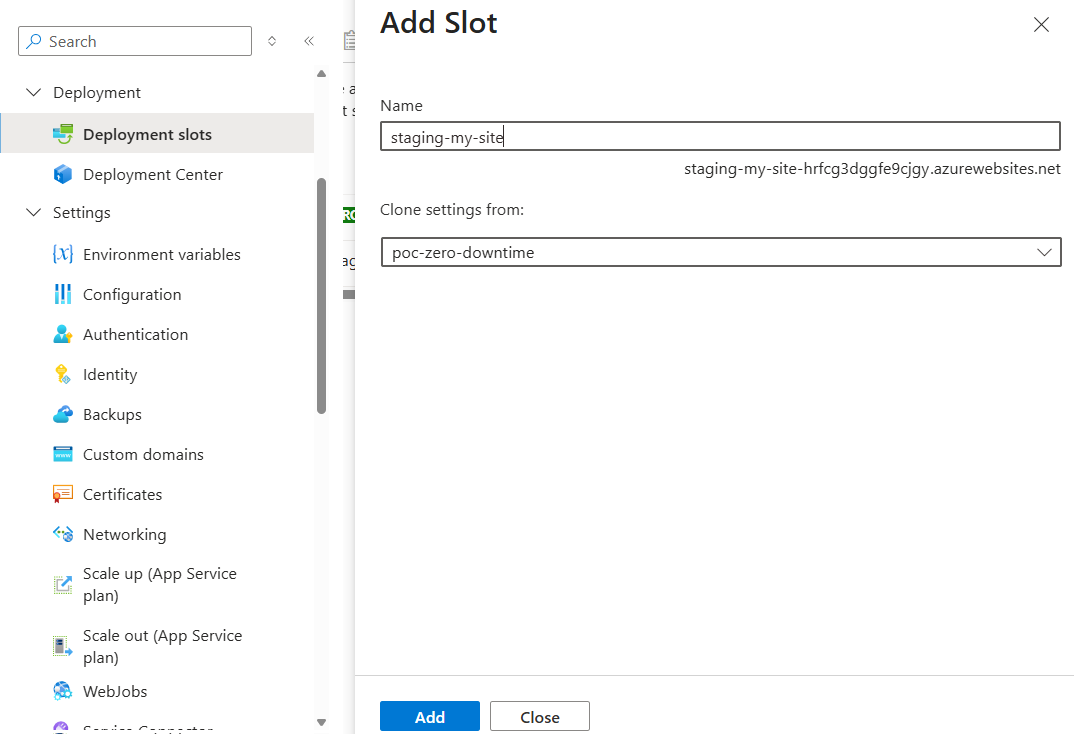

- Go to "Deployment slots" and create a new one, for example: `staging-my-site`.

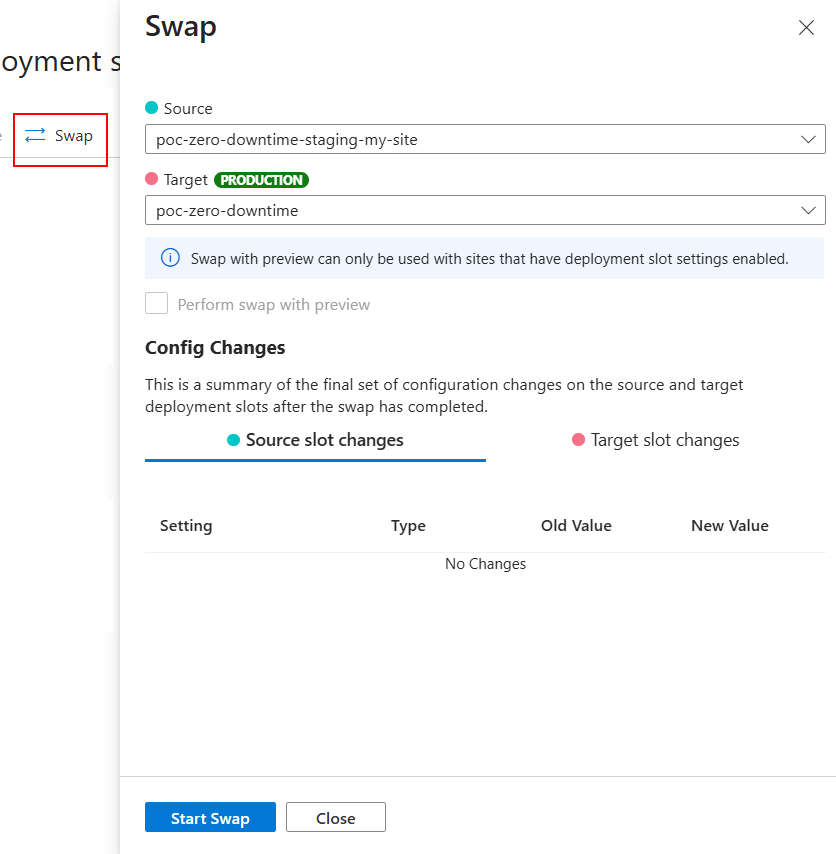

- Execute a manual Swap.
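The same portal steps can also be done from the Azure CLI. The sketch below assumes you are already logged in with `az login`; the resource group and app name are placeholders, and the `run` helper with its `DRY_RUN` switch is a hypothetical convenience for reviewing the commands before executing them.

```shell
#!/usr/bin/env bash

# Placeholder values (assumptions): replace with your own names.
RESOURCE_GROUP="${RESOURCE_GROUP:-my-resource-group}"
APP_NAME="${APP_NAME:-my-app}"
SLOT_NAME="${SLOT_NAME:-staging-my-site}"

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create the staging slot, cloning its configuration from production
# (the web app's own name refers to the production slot).
create_slot() {
  run az webapp deployment slot create \
    --resource-group "$RESOURCE_GROUP" \
    --name "$APP_NAME" \
    --slot "$SLOT_NAME" \
    --configuration-source "$APP_NAME"
}

# Swap the staging slot with production.
swap_slot() {
  run az webapp deployment slot swap \
    --resource-group "$RESOURCE_GROUP" \
    --name "$APP_NAME" \
    --slot "$SLOT_NAME" \
    --target-slot production
}
```

Calling `DRY_RUN=1 create_slot` prints the command without touching Azure, which is a cheap way to double-check the names first.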

“Okay, this is fine… but I’m not going to do this manually every time I deploy.”

And you’re absolutely right.

🧪 Automating the swap

We are going to automate the swap with GitHub Actions.

- Modify the GitHub Actions workflow to deploy to the `staging-my-site` slot (instead of production).

- Add a text or visual change to know that this version is the new one.

- Launch the workflow.

- Check that you have two active versions: one in production and one in `staging-my-site`.

Result: zero downtime.

      - name: Deploy to Azure
        uses: azure/webapps-deploy@v3
        with:
          app-name: ${{ secrets.AZURE_APP_NAME }}
    +     slot-name: staging-my-site
          images: ${{env.IMAGE_NAME}}

    + - name: Swap
    +   run: |
    +     az webapp deployment slot swap \
    +       --resource-group ${{ secrets.AZURE_RESOURCE_GROUP }} \
    +       --name ${{ secrets.AZURE_APP_NAME }} \
    +       --slot staging-my-site \
    +       --target-slot production

😬 The detail

If you swap right after deploying to staging-my-site, the container may not yet be ready. If you swap too soon, you end up with downtime again.

💰 Paid option: Windows

If you use the Windows operating system instead of Linux, there is an auto swap flag that makes Azure wait until the container is ready before swapping.

We rule this option out: it implies an extra cost, and it means giving up Linux.

🕒 Simple option: Fixed wait

A quick way (although not perfect) is to add a wait before swapping:

      - name: Deploy to Azure
        uses: azure/webapps-deploy@v3
        with:
          app-name: ${{ secrets.AZURE_APP_NAME }}
          slot-name: staging-my-site
          images: ${{env.IMAGE_NAME}}

    + - name: Wait before swap
    +   run: sleep 120 # wait 2 minutes

      - name: Swap

✅ Easy to implement

❌ Can fail if the container takes longer than expected

🧠 Advanced option: Healthcheck + active wait

A more robust option is to perform a healthcheck before swapping.

How?

- On your site, add an `/api/health` endpoint that returns `200 OK` when the site is ready.

- In the GitHub Actions workflow, before swapping:

  - Make a request to `/api/health` on the `staging-my-site` slot.

  - Wait until you receive a `200 OK`.

  - If it fails, wait a few seconds and retry.

  - If everything is OK, perform the swap.

      - name: Deploy to Azure
        uses: azure/webapps-deploy@v3
        with:
          app-name: ${{ secrets.AZURE_APP_NAME }}
          slot-name: staging-my-site
          images: ${{env.IMAGE_NAME}}

    + - name: Waiting for deployment to finish
    +   run: |
    +     sleep 30
    +     # --fail: exit non-zero on an HTTP error, so the job stops before the swap
    +     curl \
    +       --fail \
    +       --retry 20 \
    +       --retry-delay 10 \
    +       --retry-connrefused \
    +       "${{ secrets.STAGING_HEALTH_CHECK_URL }}"

      - name: Swap

Here’s what we do:

- First, wait 30 seconds to give the container time to start (to avoid making a request to the old version still deployed).

- Then make a `curl` request to the healthcheck URL of the `staging-my-site` slot.

- If the request fails, retry up to 20 times with a delay of 10 seconds between attempts.

- If all goes well, perform the swap.
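If you prefer the retry logic spelled out instead of relying on curl's flags, the active wait can be sketched as a small polling loop. This is only an illustration: `http_status`, `wait_until_healthy` and the `STATUS_CMD` override are names made up for this example, and the defaults of 20 attempts and 10 seconds mirror the values used above.

```shell
#!/usr/bin/env bash

# Print the HTTP status code for a URL.
# curl prints 000 when no HTTP response arrives (for example, connection refused).
http_status() {
  curl --silent --output /dev/null --max-time 10 --write-out '%{http_code}' "$1" || true
}

# Poll a URL until it answers 200, or give up after max_attempts.
# STATUS_CMD (hypothetical) lets you replace the probe, e.g. for a dry run.
wait_until_healthy() {
  local url="$1" max_attempts="${2:-20}" delay="${3:-10}"
  local attempt code
  for ((attempt = 1; attempt <= max_attempts; attempt++)); do
    code="$("${STATUS_CMD:-http_status}" "$url")"
    if [[ "$code" == "200" ]]; then
      echo "healthy after $attempt attempt(s)"
      return 0
    fi
    echo "attempt $attempt/$max_attempts: got $code" >&2
    # No point sleeping after the last attempt.
    if ((attempt < max_attempts)); then
      sleep "$delay"
    fi
  done
  echo "still unhealthy after $max_attempts attempts" >&2
  return 1
}
```

Because the function returns a non-zero status when the slot never becomes healthy, a workflow step that calls it fails and the swap step that follows does not run.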

🔄 Full workflow with slots and wait

    name: Deploy

    on:
      push:
        branches:
          - main

    env:
      IMAGE_NAME: ghcr.io/${{github.repository}}:${{github.run_number}}-${{github.run_attempt}}

    permissions:
      contents: "read"
      packages: "write"
      id-token: "write"

    jobs:
      cd:
        runs-on: ubuntu-latest
        environment:
          name: Production
          url: https://my-app.com
        steps:
          - name: Checkout repository
            uses: actions/checkout@v4

          - name: Log in to GitHub container registry
            uses: docker/login-action@v3
            with:
              registry: ghcr.io
              username: ${{ github.actor }}
              password: ${{ secrets.GITHUB_TOKEN }}

          - name: Build and push docker image
            run: |
              docker build -t ${{ env.IMAGE_NAME }} .
              docker push ${{env.IMAGE_NAME}}

          - name: Login Azure
            uses: azure/login@v2
            env:
              AZURE_LOGIN_PRE_CLEANUP: true
              AZURE_LOGIN_POST_CLEANUP: true
              AZURE_CORE_OUTPUT: none
            with:
              client-id: ${{ secrets.AZURE_CLIENT_ID }}
              tenant-id: ${{ secrets.AZURE_TENANT_ID }}
              subscription-id: ${{ secrets.AZURE_SUBSCRIPTION_ID }}

          - name: Deploy to Azure
            uses: azure/webapps-deploy@v3
            with:
              app-name: ${{ secrets.AZURE_APP_NAME }}
              slot-name: staging-my-site
              images: ${{env.IMAGE_NAME}}

          - name: Waiting for deployment to finish
            run: |
              sleep 30
              # --fail: exit non-zero on an HTTP error, so the job stops before the swap
              curl --fail --retry 20 --retry-delay 10 --retry-connrefused "${{ secrets.STAGING_HEALTH_CHECK_URL }}"

          - name: Swap
            run: |
              az webapp deployment slot swap \
                --resource-group ${{ secrets.AZURE_RESOURCE_GROUP }} \
                --name ${{ secrets.AZURE_APP_NAME }} \
                --slot staging-my-site \
                --target-slot production

💰 Costs and considerations

- The Premium Azure App Service plan starts from approx. €56/month; you can check exact prices in the Azure calculator.

- Includes up to 20 slots, so you can have several apps running in parallel.

- You can:

  - Scale vertically (more CPU, more RAM).

  - Scale horizontally (more instances).

- If you perform a swap and something goes wrong, you can swap again and return to the previous version.
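That rollback can be automated as well. The following is a rough sketch, not a drop-in step: it swaps, checks production, and swaps back if the check fails. `PRODUCTION_HEALTH_CHECK_URL`, `AZURE_RESOURCE_GROUP` and `AZURE_APP_NAME` are assumed to be environment variables you provide, and `SWAP_CMD` / `CHECK_CMD` are hypothetical hooks that make the logic easy to try without touching Azure.

```shell
#!/usr/bin/env bash

# Swap staging with production (the same command the workflow uses).
swap_slots() {
  az webapp deployment slot swap \
    --resource-group "$AZURE_RESOURCE_GROUP" \
    --name "$AZURE_APP_NAME" \
    --slot staging-my-site \
    --target-slot production
}

# Succeeds only if production answers the health check without an HTTP error.
# PRODUCTION_HEALTH_CHECK_URL is an assumed variable, not defined in the workflow.
check_production() {
  curl --fail --silent --output /dev/null --max-time 10 "$PRODUCTION_HEALTH_CHECK_URL"
}

# Swap, verify production, and swap back if the verification fails.
swap_with_rollback() {
  local swap_cmd="${SWAP_CMD:-swap_slots}"
  local check_cmd="${CHECK_CMD:-check_production}"

  "$swap_cmd" || return 1

  if "$check_cmd"; then
    echo "swap ok"
    return 0
  fi

  echo "production check failed, swapping back" >&2
  # A second swap puts the previous version back in production.
  "$swap_cmd" && echo "rolled back"
  return 1
}
```

The function still returns a non-zero status after a successful rollback, so the workflow run is marked as failed and the problem is not silently hidden.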

🧾 Conclusions

The use of Azure App Service Slots allows you to:

✅ Deploy without downtime

✅ Have staging environments ready to validate

✅ Roll back easily in case of error

✅ Maintain a professional experience for your users

All this without complicating yourself with low-level infrastructure.